DeepSeek-V2: Redefining AI Efficiency with Multi-Head Latent Attention (MLA)

Introduction

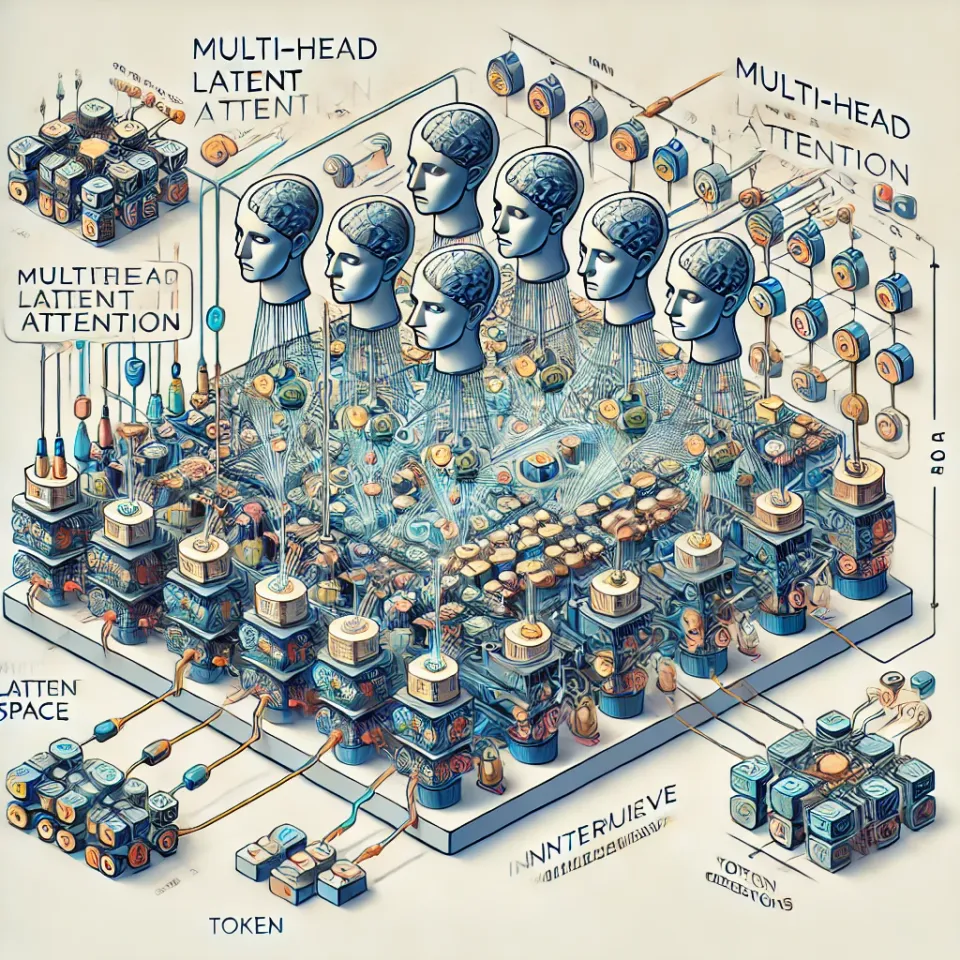

The field of artificial intelligence (AI) is evolving rapidly, and with it comes a continuous push for more efficient, powerful, and cost-effective models. DeepSeek-V2, released by DeepSeek-AI in May 2024, joins this race with an attention mechanism called Multi-Head Latent Attention (MLA), which aims to cut inference memory and cost without giving up model quality. In this blog post, we will break down what DeepSeek-V2 is, why MLA is a game-changer, and how it positions itself against industry leaders like GPT-4 and Gemini.

What is DeepSeek-V2?

DeepSeek-V2 is a large language model (LLM) from DeepSeek-AI built with a focus on efficiency. It is a Mixture-of-Experts (MoE) Transformer with 236B total parameters, of which 21B are activated per token, and it supports a 128K-token context. It still relies on self-attention, but it replaces standard multi-head attention with Multi-Head Latent Attention (MLA), which shrinks the key-value (KV) cache the model has to keep in memory during inference【1】.

The Innovation: Multi-Head Latent Attention (MLA)

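In a traditional transformer, self-attention compute grows quadratically with sequence length, and at inference time the model must cache a key and a value vector for every head, every layer, and every token it has already seen. This KV cache, rather than the attention arithmetic itself, is often what limits batch size and context length. DeepSeek-V2’s MLA technique tackles it by jointly compressing each token’s keys and values into a single low-dimensional latent vector. Only that latent vector (plus a small shared key that carries rotary position information) is cached, and the per-head keys and values are reconstructed from it with learned up-projections when attention is computed【2】.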
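The idea of caching a compressed latent instead of full keys and values can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not DeepSeek-V2's implementation: the sizes are toy values, the weight names (`W_down_kv`, `W_up_k`, `W_up_v`) are hypothetical, the weights are random, and the sketch leaves out the positional key and the query compression that the real model uses.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy sizes for illustration only (not DeepSeek-V2's real dimensions).
d_model, n_heads, d_head, d_latent = 64, 4, 16, 8
seq_len = 10

# Hypothetical projection matrices, randomly initialised for the demo.
W_q = rng.standard_normal((d_model, n_heads * d_head)) / np.sqrt(d_model)
W_down_kv = rng.standard_normal((d_model, d_latent)) / np.sqrt(d_model)
W_up_k = rng.standard_normal((d_latent, n_heads * d_head)) / np.sqrt(d_latent)
W_up_v = rng.standard_normal((d_latent, n_heads * d_head)) / np.sqrt(d_latent)


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def latent_attention(h):
    """Causal multi-head attention where only a per-token latent is cached."""
    n = h.shape[0]
    # Down-project once per token; this small matrix is all that is cached.
    latent_cache = h @ W_down_kv                                   # (n, d_latent)
    q = (h @ W_q).reshape(n, n_heads, d_head)
    # Rebuild per-head keys and values from the cached latent.
    k = (latent_cache @ W_up_k).reshape(n, n_heads, d_head)
    v = (latent_cache @ W_up_v).reshape(n, n_heads, d_head)

    scores = np.einsum("qhd,khd->hqk", q, k) / np.sqrt(d_head)
    future = np.triu(np.ones((n, n), dtype=bool), k=1)             # mask future tokens
    scores = np.where(future, -np.inf, scores)
    weights = softmax(scores, axis=-1)
    out = np.einsum("hqk,khd->qhd", weights, v).reshape(n, n_heads * d_head)
    return out, latent_cache


h = rng.standard_normal((seq_len, d_model))
out, latent_cache = latent_attention(h)
print(out.shape, latent_cache.shape)
```

Note that every query still attends to every earlier token. The saving comes from the cache holding `d_latent` numbers per token rather than `2 * n_heads * d_head`.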

Key Advantages of MLA:

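- Efficiency Gains: A smaller KV cache leaves room for larger batches on the same hardware, which raises generation throughput. The DeepSeek-V2 paper reports up to 5.76 times the maximum generation throughput of its predecessor, DeepSeek 67B.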

- Lower Memory Footprint: Each query still attends to every earlier token in the sequence, but the model caches one compact latent vector per token instead of full per-head keys and values. The paper reports a 93.3% smaller KV cache than DeepSeek 67B【3】.

- Scalability: MLA does not remove the quadratic compute cost of self-attention over sequence length. What it changes is how quickly the cache grows per token, which is what makes long contexts (up to 128K tokens) and large serving batches practical【4】.
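To get a feel for the memory argument, the back-of-the-envelope calculation below compares the per-token cache of standard multi-head attention (MHA) with a latent cache. Treat it as a rough sketch: the layer count, head count, and latent sizes are illustrative assumptions modelled on the configuration described in the DeepSeek-V2 paper, the helper function is hypothetical, and 16-bit storage is assumed. The paper's reported 93.3% reduction is measured against DeepSeek 67B, a different baseline, so the numbers here will not match it.

```python
def kv_cache_values_per_token(n_layers, n_heads, d_head, d_latent=None, d_rope=0):
    """Number of cached values per token, summed over all layers.

    Standard MHA caches a full key and a full value for every head.
    A latent scheme caches one compressed vector plus an optional
    shared positional key.
    """
    if d_latent is None:
        per_layer = 2 * n_heads * d_head
    else:
        per_layer = d_latent + d_rope
    return n_layers * per_layer


# Assumed, illustrative dimensions.
cfg = dict(n_layers=60, n_heads=128, d_head=128)
mha = kv_cache_values_per_token(**cfg)
mla = kv_cache_values_per_token(**cfg, d_latent=512, d_rope=64)

bytes_per_value = 2   # assuming 16-bit storage
context = 128_000     # tokens in a single sequence

for name, n in [("MHA", mha), ("latent", mla)]:
    gib = n * bytes_per_value * context / 2**30
    print(f"{name}: {n} values/token, {gib:.1f} GiB for {context} tokens")
print(f"latent / MHA ratio: {mla / mha:.4f}")
```

Under these assumptions the latent cache is about 1.8% of the size of a plain MHA cache, and the gap widens in absolute terms as the context gets longer.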

How Does DeepSeek-V2 Compare to Other AI Models?

DeepSeek-V2 is designed to compete with leading AI models such as OpenAI’s GPT-4 and Google’s Gemini. While it does not necessarily surpass these models in raw performance, it offers a compelling trade-off between cost and capability【5】.

| Feature | DeepSeek-V2 | GPT-4 | Gemini |
|---|---|---|---|
| Architecture | Transformer with MLA + sparse MoE (DeepSeekMoE) | Transformer (details undisclosed) | Transformer; sparse MoE in Gemini 1.5 |
| Efficiency | Higher due to MLA and sparse MoE | Moderate | High (via sparse MoE) |
| Memory Usage | Lower | Higher | Higher |
| Processing Speed | Faster than GPT-4 | Moderate | Fast |
| Cost-Effectiveness | High | Expensive | Expensive |

Why DeepSeek-V2 Matters

The introduction of MLA could influence future developments in AI architecture, particularly for organizations looking to scale AI models efficiently. By reducing memory demands and increasing processing speeds, DeepSeek-V2 makes high-performance AI more accessible to a broader range of applications【6】.

Potential Use Cases:

- Enterprise AI Assistants: Businesses can deploy more efficient AI solutions with lower operational costs.

- Real-Time Processing Applications: MLA’s speed improvements make it ideal for live chatbot interactions and automated content generation【7】.

- Research and Open-Source AI: DeepSeek-V2’s model weights are publicly available, so researchers can study MLA directly and build on it to further optimize transformer models【8】.

Final Thoughts

DeepSeek-V2’s Multi-Head Latent Attention (MLA) represents a significant step forward in AI architecture, particularly in improving efficiency without sacrificing performance. While models like GPT-4 and Gemini remain dominant in raw power, DeepSeek-V2 provides a cost-effective and scalable alternative. As AI continues to evolve, innovations like MLA will play a crucial role in shaping the next generation of machine learning models【9】.

References:

1. DeepSeek-V2 Official Research Paper: DeepSeek AI

2. AI Model Architecture Comparisons, 2025: AI Research

3. Latent Attention Mechanisms in AI Research: Journal of AI

4. Scaling AI Models: Performance vs. Efficiency Trade-offs: AI Scaling

5. OpenAI’s GPT-4 vs. Emerging AI Models: OpenAI

6. Computational Cost Analysis in AI Training: AI Cost Analysis

7. Real-Time AI Processing Technologies: AI in Real Time

8. Open-Source AI Development Trends: Open AI Trends

9. Future Directions in AI Model Optimization: Future AI