LlamaIndex: Enabling Data-Augmented LLM Applications

Introduction

Integrating custom data with large language models (LLMs) has become a key step in building useful AI applications. LlamaIndex is a data framework designed to bridge the gap between LLMs and your private data. It provides tools that make that data accessible and usable for LLMs, enabling sophisticated, data-augmented LLM applications. Originally released as GPT Index, LlamaIndex has grown to support the entire pipeline, from ingesting and structuring data to retrieving the right pieces of information and integrating them into LLM-driven workflows.

According to IBM, LlamaIndex can ingest and index data from more than 160 sources and formats, so LLMs can draw on information beyond their training data. By retrieving external knowledge at query time and supplying it to the model, an approach known as retrieval-augmented generation (RAG), LlamaIndex helps overcome the fact that LLMs have no built-in awareness of private or up-to-date information.

What is LlamaIndex?

LlamaIndex is an open-source data orchestration framework for LLM applications. It acts as a connector between custom data and an LLM such as GPT-4. LLMs are typically trained on massive public datasets and have no direct access to private, domain-specific knowledge. LlamaIndex addresses this by providing a structured interface for feeding data to LLMs in a manageable, queryable form.

With LlamaIndex, developers can ingest and index data from multiple sources, such as documents, APIs, and websites, so that an LLM can retrieve relevant information when answering questions or performing tasks. Grounding responses in retrieved data tends to make them more accurate and relevant. For instance, a company can use LlamaIndex to give a chatbot access to proprietary policy documents or databases that lie outside the model’s training set.
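To make the retrieve-then-answer pattern concrete, here is a minimal sketch in plain Python. It is not LlamaIndex code: the documents are made up, the retriever simply counts shared words (a crude stand-in for the embedding-based retrieval LlamaIndex typically uses), and the LLM call is omitted, so the result is the grounded prompt that would be sent to a model.

```python
import re

# Hypothetical in-memory knowledge base. With LlamaIndex these would typically
# be Documents produced by a data connector.
DOCUMENTS = {
    "refund_policy": "Customers may request a refund within 30 days of purchase.",
    "shipping_policy": "Orders ship within two business days from our warehouse.",
    "security_policy": "Employees must enable two-factor authentication on all accounts.",
}


def tokenize(text: str) -> set[str]:
    """Lowercased word set; a crude stand-in for embedding-based retrieval."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: dict[str, str], top_k: int = 1) -> list[str]:
    """Return the ids of the top_k documents sharing the most words with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        docs,
        key=lambda doc_id: len(query_words & tokenize(docs[doc_id])),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query: str, docs: dict[str, str], doc_ids: list[str]) -> str:
    """Insert the retrieved text into the prompt so the LLM answers from it."""
    context = "\n".join(f"- {docs[doc_id]}" for doc_id in doc_ids)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\n"
        "Answer:"
    )


if __name__ == "__main__":
    question = "How many days do customers have to request a refund?"
    hits = retrieve(question, DOCUMENTS)
    print(hits)  # the refund policy shares the most words with the question
    print(build_prompt(question, DOCUMENTS, hits))
```

The key design point is the separation of concerns: retrieval decides *what* the model sees, and the prompt template instructs the model to answer only from that material.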

The Visionaries Behind LlamaIndex

LlamaIndex was created by Jerry Liu (a machine learning engineer) and Simon Suo (an AI technologist) to tackle the challenge of integrating external data into LLMs. Early LLM applications faced three major hurdles:

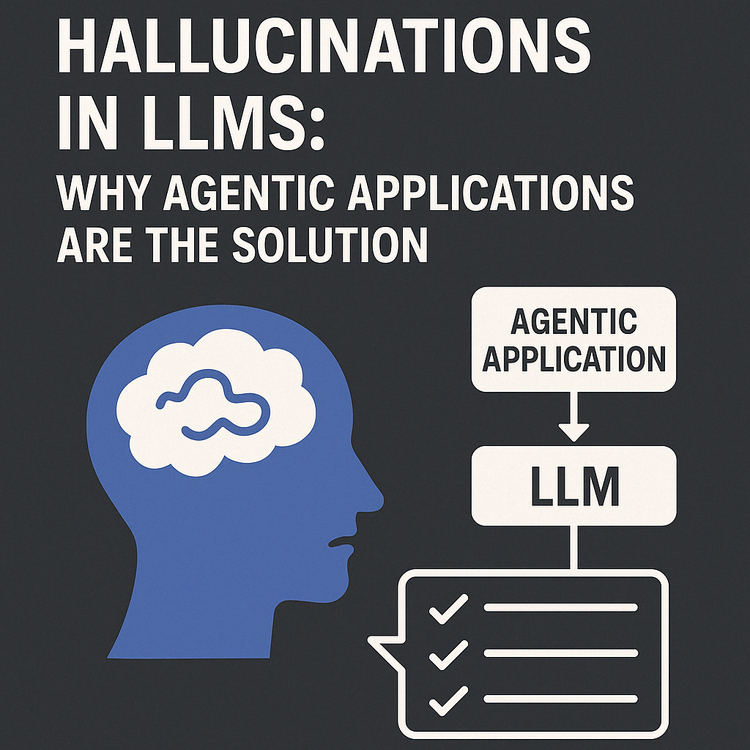

- Lack of domain knowledge: Even advanced LLMs can “hallucinate” or provide irrelevant answers without access to custom data.

- Unclear best practices: Before LlamaIndex, developers lacked standardized methods to integrate enterprise or private data into LLM workflows.

- LLM limitations: Context window constraints and the high cost of fine-tuning meant that models struggled to handle large external knowledge bases efficiently.

Since its inception, LlamaIndex has grown rapidly and attracted more than 450 open-source contributors. The framework remains freely available under the permissive MIT License, so developers and organizations can use, modify, and redistribute it, including commercially, as long as they retain the copyright and license notice.

Core Components and Features

LlamaIndex provides several core components that enable data-augmented LLM workflows:

- Data Connectors (Loaders): LlamaIndex offers a broad range of data connectors for ingesting information from APIs, PDFs, SQL/NoSQL databases, web pages, images, and audio. These loaders convert raw data into a common structured format: Document objects.

- Indexes: LlamaIndex structures data into indexes optimized for retrieval. The most common is the Vector Store Index, which converts text into embeddings (numeric vectors that capture semantic meaning) for efficient similarity search. Other index types, such as list-based (now SummaryIndex), tree-based, and knowledge graph indexes, suit different use cases.

- Query Engine: This interface accepts a natural language query, retrieves relevant indexed data, and passes it to an LLM, so that responses are grounded in the source information.

- Chat Engine: A stateful counterpart to the Query Engine, the chat engine supports multi-turn interactions by keeping a conversation history, so the LLM can interpret follow-up questions in the context of earlier turns.

- Agents and Tools: LlamaIndex supports LLM-powered agents that can retrieve data, call APIs, and perform multi-step reasoning. Agents can use a ReAct-style (reason and act) loop, among other strategies such as native function calling, to decide which tools or actions to invoke to answer a request.
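The Indexes and Query Engine components can be illustrated with a toy vector store in plain Python. This is a sketch under stated assumptions, not LlamaIndex's API: `Document`, `ToyVectorIndex`, and `query_engine` are hypothetical names, and `embed` returns term counts in place of a real embedding model, so similarity here reflects word overlap rather than meaning.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Document:
    """Hypothetical stand-in for a loaded document: text plus metadata."""
    text: str
    metadata: dict = field(default_factory=dict)


def embed(text: str) -> Counter:
    """Toy 'embedding': a sparse term-count vector.

    A real vector store index would call an embedding model here; term counts
    capture word overlap only, not meaning.
    """
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in set(a) & set(b))
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorIndex:
    """Stores (vector, document) pairs and returns the top-k most similar documents."""

    def __init__(self, docs: list[Document]):
        self.entries = [(embed(doc.text), doc) for doc in docs]

    def retrieve(self, query: str, top_k: int = 2) -> list[tuple[float, Document]]:
        query_vec = embed(query)
        scored = [(cosine(query_vec, vec), doc) for vec, doc in self.entries]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:top_k]


def query_engine(index: ToyVectorIndex, question: str, top_k: int = 2) -> str:
    """Retrieve context and format a grounded prompt; the LLM call itself is omitted."""
    hits = index.retrieve(question, top_k)
    context = "\n".join(f"[{doc.metadata.get('source', '?')}] {doc.text}" for _, doc in hits)
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"


if __name__ == "__main__":
    docs = [
        Document("The VPN must be used on public Wi-Fi.", {"source": "it.pdf"}),
        Document("Expense reports are due on the fifth of each month.", {"source": "finance.pdf"}),
    ]
    index = ToyVectorIndex(docs)
    print(query_engine(index, "When are expense reports due?", top_k=1))
```

Replacing `embed` with a learned embedding model is what makes retrieval semantic; the top-k search and prompt assembly stay the same. Keeping the source in metadata lets the final answer cite where its context came from.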

Recent Advancements in LlamaIndex

LlamaIndex has undergone rapid evolution, adding several significant features:

- Workflows System: An event-driven, step-based system for building multi-step LLM applications, such as agents, with support for loops, branching, and shared state.

- Graph RAG: Uses knowledge graphs as structured context, which can improve accuracy on question-answering tasks that depend on relationships between entities.

- Improved Debugging & Observability: Instrumentation and tracing tools make it easier to monitor and refine retrieval pipelines in production.

- TypeScript Support: LlamaIndex.TS brings the framework to JavaScript and TypeScript developers, enabling direct integration with web-based AI applications.

- Optimized Document Parsing: Enhancements in document chunking and embedding improve retrieval efficiency for large text datasets.
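The loop-and-branch structure described in the Workflows bullet can be sketched in plain Python as steps that each consume one event and emit the next, sharing a state dictionary. This does not use LlamaIndex's workflow API; the event classes, step functions, and the "widen retrieval on each retry" policy are hypothetical, chosen only to show a conditional loop with state.

```python
from dataclasses import dataclass, field


@dataclass
class QueryEvent:
    query: str


@dataclass
class ContextEvent:
    query: str
    passages: list[str] = field(default_factory=list)


@dataclass
class DoneEvent:
    result: str


def retrieve_step(event: QueryEvent, state: dict) -> ContextEvent:
    """Fetch passages; each retry widens the search by one passage (hypothetical policy)."""
    state["attempts"] += 1
    return ContextEvent(event.query, state["corpus"][: state["attempts"]])


def check_step(event: ContextEvent, state: dict):
    """Conditional branch: loop back to retrieval until enough context is found."""
    if len(event.passages) < state["min_passages"]:
        return QueryEvent(event.query)
    return DoneEvent(f"Answer {event.query!r} from {len(event.passages)} passages")


# Route each event type to the step that handles it.
STEPS = {QueryEvent: retrieve_step, ContextEvent: check_step}


def run_workflow(query: str, corpus: list[str], min_passages: int = 2, max_steps: int = 10):
    """Dispatch events to steps until a DoneEvent appears or the step budget runs out."""
    state = {"attempts": 0, "corpus": corpus, "min_passages": min_passages}
    event = QueryEvent(query)
    for _ in range(max_steps):
        event = STEPS[type(event)](event, state)
        if isinstance(event, DoneEvent):
            return event.result, state
    raise RuntimeError("workflow did not finish within max_steps")


if __name__ == "__main__":
    result, state = run_workflow("What is RAG?", ["passage A", "passage B", "passage C"])
    print(result, "after", state["attempts"], "retrieval attempts")
```

The step budget (`max_steps`) matters in any looping design: without it, a check that can never pass would spin forever.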

Comparison with Similar Technologies

LlamaIndex is often compared to LangChain, another LLM framework. While both support building LLM applications, LlamaIndex focuses on retrieval-augmented generation (RAG), which makes it well suited to applications that require efficient search and retrieval. According to IBM, LlamaIndex excels at indexing and retrieving data, while LangChain provides a broader orchestration layer for multi-step LLM workflows. Many developers combine the two, using LlamaIndex as the retrieval component inside a LangChain pipeline.

Best Practices and Use Cases for Developers

Optimizing Chunk Size for Retrieval

One of the most important factors in effective retrieval is chunk size. According to Pinecone:

- Small chunks (e.g., 128 tokens) improve retrieval precision but may lack sufficient context.

- Larger chunks (e.g., 512 tokens or more) provide more context but can dilute relevance when a query matches only part of the chunk.

A hybrid approach, chunk ensembling, indexes the same content at several chunk sizes and combines the results, balancing precision against contextual understanding.
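One simple way to implement chunk ensembling is to split the same text at several chunk sizes and index all the resulting chunks together, so a query can match either a precise small chunk or a context-rich large one. The sketch below assumes whitespace-separated words as a stand-in for model tokens and a 10% overlap between neighboring chunks; `chunk_tokens` and `ensemble_chunks` are illustrative helpers, not LlamaIndex functions.

```python
def chunk_tokens(tokens: list[str], size: int, overlap: int = 0) -> list[list[str]]:
    """Split tokens into windows of `size`, each sharing `overlap` tokens with the previous one."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    chunks: list[list[str]] = []
    start, step = 0, size - overlap
    while start < len(tokens):
        end = min(start + size, len(tokens))
        chunks.append(tokens[start:end])
        if end == len(tokens):  # last window reached the end; avoid a redundant tail chunk
            break
        start += step
    return chunks


def ensemble_chunks(text: str, sizes: tuple[int, ...] = (128, 512), overlap_ratio: float = 0.1) -> list[dict]:
    """Chunk the same text at several sizes and pool every chunk for indexing."""
    tokens = text.split()  # whitespace words approximate model tokens (assumption)
    pool = []
    for size in sizes:
        overlap = int(size * overlap_ratio)
        for position, chunk in enumerate(chunk_tokens(tokens, size, overlap)):
            pool.append({"chunk_size": size, "position": position, "text": " ".join(chunk)})
    return pool


if __name__ == "__main__":
    sample = " ".join(f"word{i}" for i in range(300))
    for chunk in ensemble_chunks(sample):
        print(chunk["chunk_size"], chunk["position"], len(chunk["text"].split()))
```

At query time, retrieval runs over the whole pool; the `chunk_size` field records which granularity each match came from, which helps when tuning the size mix.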

Hybrid Search for Enhanced Accuracy

To maximize search relevance, hybrid retrieval combines vector similarity (semantic search) with keyword-based retrieval such as BM25. According to Arize AI, this can significantly improve recall, because exact keyword matches and semantically similar content are retrieved together.
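A common way to merge keyword and vector results is reciprocal rank fusion (RRF), which scores each document by summing 1/(k + rank) across the ranked lists; the text above does not name a fusion method, so RRF is an assumption here. The sketch implements Okapi BM25 in plain Python and takes the vector-search ranking as an input; in the usage example that ranking is hard-coded to stand in for a real embedding search.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def bm25_scores(query: str, docs: list[str], k1: float = 1.5, b: float = 0.75) -> list[float]:
    """Okapi BM25 score of every document for the query (the keyword side)."""
    tokenized = [tokenize(doc) for doc in docs]
    n_docs = len(tokenized)
    avg_len = sum(len(toks) for toks in tokenized) / n_docs
    # Document frequency: in how many documents each term appears.
    df = Counter(term for toks in tokenized for term in set(toks))
    query_terms = set(tokenize(query))
    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        score = 0.0
        for term in query_terms & set(tf):
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1)
            norm = tf[term] + k1 * (1 - b + b * len(toks) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


def reciprocal_rank_fusion(rankings: list[list[int]], k: int = 60) -> list[int]:
    """Fuse ranked lists of document indices: score(d) = sum of 1 / (k + rank)."""
    fused = Counter()
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            fused[doc_id] += 1.0 / (k + rank)
    return [doc_id for doc_id, _ in fused.most_common()]


def hybrid_search(query: str, docs: list[str], dense_ranking: list[int], k: int = 60) -> list[int]:
    """Combine a BM25 ranking with a ranking from a vector store via RRF."""
    scores = bm25_scores(query, docs)
    keyword_ranking = sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)
    return reciprocal_rank_fusion([keyword_ranking, dense_ranking], k=k)


if __name__ == "__main__":
    docs = [
        "Error code E42 means the disk quota was exceeded.",
        "How to free up storage when your account runs out of space.",
        "Billing questions are handled by the finance team.",
        "Reset your password from the account settings page.",
    ]
    # Hard-coded stand-in for a vector-search ranking (doc 1 paraphrases doc 0's problem).
    dense_ranking = [1, 3, 0, 2]
    print(hybrid_search("fix error E42", docs, dense_ranking))
```

Because RRF uses only rank positions, BM25 scores and cosine similarities never need to be put on a common scale; k = 60 is a commonly used default. Here the exact keyword match (doc 0) and the paraphrase (doc 1) both end up in the top two.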

Building AI Chatbots & Search Tools

Companies like Notion have successfully implemented LlamaIndex to power context-aware chatbots and enterprise search tools. It is widely used for:

- Enterprise knowledge bases

- Legal & compliance document search

- AI-powered customer support

Integration with Other AI Tools

LlamaIndex integrates with:

- Vector Databases: Pinecone, Weaviate, FAISS, Chroma

- LLMs: OpenAI GPT-4, Anthropic Claude, Hugging Face models

- Frameworks: LangChain, Haystack, Semantic Kernel

- Storage Solutions: PostgreSQL, Elasticsearch, Azure Blob Storage

According to Microsoft, enterprises increasingly deploy LlamaIndex on Azure AI for knowledge retrieval in large organizations.

Conclusion

LlamaIndex has changed how LLMs interact with private and dynamic data, enabling AI-powered search, enterprise assistants, and knowledge-driven applications. As an open-source framework, it continues to evolve, adding support for new data sources and retrieval techniques.

With LlamaIndex, developers can build context-aware, data-augmented LLM applications that are more accurate, relevant, and trustworthy, helping AI models deliver the right information at the right time.