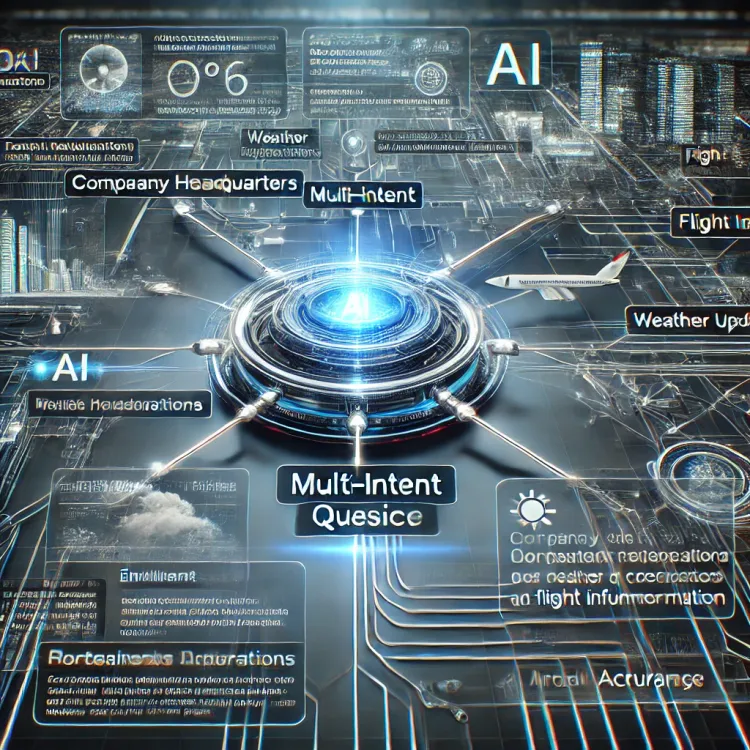

Leveraging LLM Intelligence for Multi-Intent Queries in Semantic Kernel

Handling multi-intent queries in Semantic Kernel requires intelligent entity linking. We use prompt engineering, function choice behaviors, and contextual synthesis to improve AI accuracy without hardcoded logic.

Keeping Your Vector Database Fresh: Strategies for Dynamic Document Stores

Keeping your vector database fresh ensures accurate search results and a seamless AI experience. This post explores change detection and efficient updates to keep your vector embeddings synchronized with dynamic content.
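As a rough illustration of the change-detection idea, here is a minimal sketch that compares content hashes to decide which documents need re-embedding. The names `content_hash` and `plan_sync` are hypothetical, and the sketch assumes you store one hash per document ID alongside your vectors; it is not taken from the post itself.

```python
import hashlib


def content_hash(text: str) -> str:
    """Return a stable fingerprint of a document's text."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_sync(current_docs: dict[str, str], stored_hashes: dict[str, str]):
    """Compare current documents against previously stored hashes.

    Returns (to_upsert, to_delete):
      to_upsert: IDs that are new or whose text changed (re-embed these)
      to_delete: IDs that no longer exist in the source (remove their vectors)
    """
    to_upsert = sorted(
        doc_id
        for doc_id, text in current_docs.items()
        if stored_hashes.get(doc_id) != content_hash(text)
    )
    to_delete = sorted(doc_id for doc_id in stored_hashes if doc_id not in current_docs)
    return to_upsert, to_delete
```

Only the IDs in `to_upsert` need new embeddings, which avoids re-indexing unchanged content on every sync.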

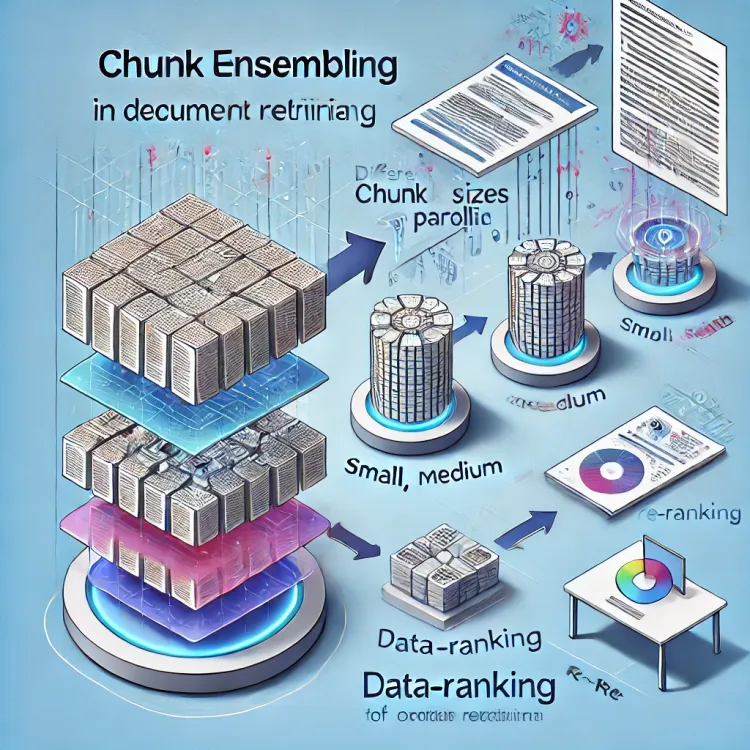

Explanation of Chunk Ensembling

Chunk Ensembling is a retrieval optimization technique that balances precision and context by retrieving multiple chunk sizes simultaneously and re-ranking
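A toy sketch of the general idea, under stated assumptions: the text is split by word count at several sizes, every chunk is scored against the query, and the pooled candidates are re-ranked together. The Jaccard word-overlap score is only a stand-in for an embedding similarity or a dedicated re-ranker, and all function names here are hypothetical.

```python
def chunk_words(text: str, size: int) -> list[str]:
    """Split text into consecutive chunks of `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def overlap_score(query: str, chunk: str) -> float:
    """Jaccard word overlap; a placeholder for a real relevance model."""
    q = set(query.lower().split())
    c = set(chunk.lower().split())
    union = q | c
    return len(q & c) / len(union) if union else 0.0


def ensemble_retrieve(text: str, query: str, sizes=(4, 8, 16), top_k: int = 3):
    """Pool chunks of several sizes, then re-rank them by score.

    Returns a list of (score, chunk_size, chunk_text), best first.
    """
    candidates = []
    for size in sizes:
        for chunk in chunk_words(text, size):
            candidates.append((overlap_score(query, chunk), size, chunk))
    # Re-rank the combined pool so small and large chunks compete directly.
    candidates.sort(key=lambda item: item[0], reverse=True)
    return candidates[:top_k]
```

In a real system each chunk size would typically live in its own index, and the final ordering would come from a stronger re-ranking model than word overlap.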

Implications of Small Chunk Sizes in Large Document Retrieval

Introduction

One of the most important factors in effective retrieval is chunk size. According to Pinecone:

* Small chunks (128 tokens)

LlamaIndex: Enabling Data-Augmented LLM Applications

In the ever-evolving world of artificial intelligence, integrating custom data with large language models (LLMs) has become crucial for building intelligent applications.

Cloud AI App

The future of AI-driven solutions is here, and we are thrilled to introduce CloudAIApp.Dev – a platform designed to revolutionize