Explanation of Distillation

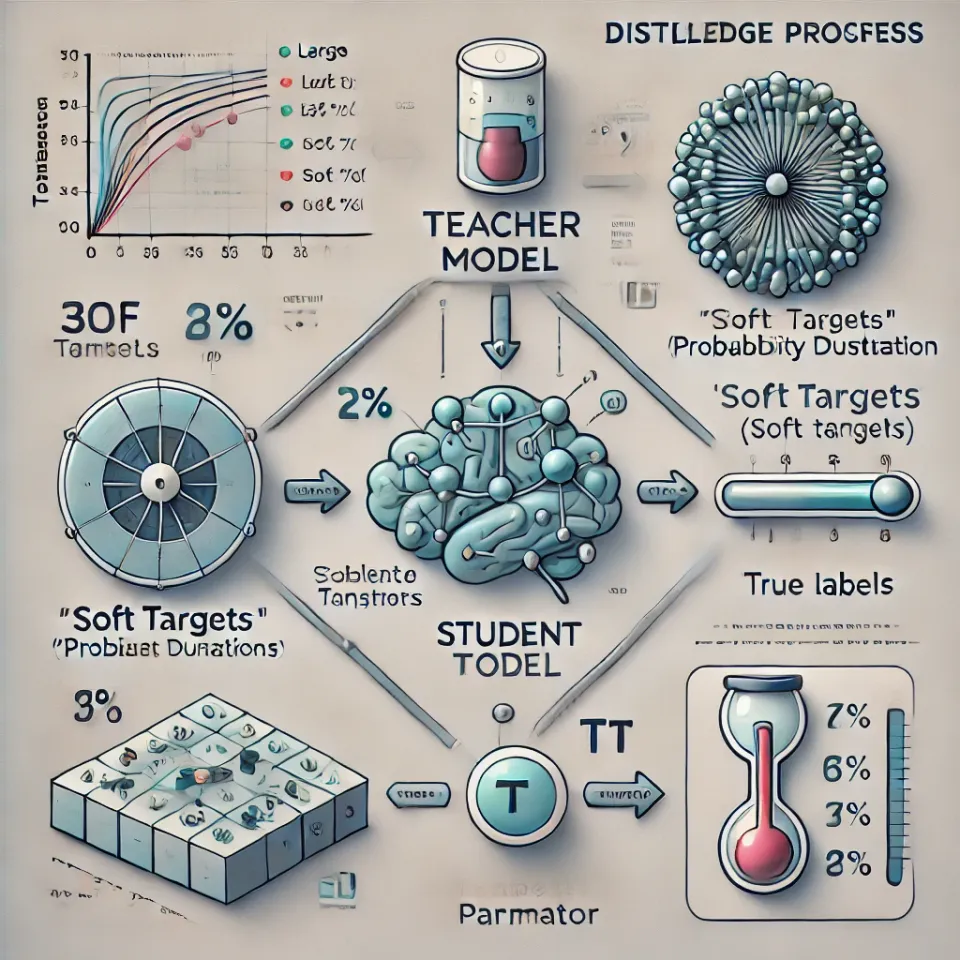

Distillation in the context of machine learning, particularly as used by companies like DeepSeek or others working with large-scale models, is a process where a smaller model (the "student") learns to replicate the performance of a larger, more complex model (the "teacher"). This technique allows for more efficient deployment of powerful models by reducing their size and computational requirements while retaining much of their original accuracy.

Here's a breakdown of how distillation typically works:

1. Teacher Model:

- A large, pre-trained model with high performance on a specific task acts as the "teacher."

- This model generates predictions, often in the form of probabilities or logits, rather than just final classifications.

2. Student Model:

- A smaller, more compact model is trained to mimic the teacher model's behavior.

- The goal is for the student model to achieve similar performance while being significantly lighter in terms of computational resources and memory.

3. Distillation Loss:

- Instead of using just the traditional supervised loss (e.g., cross-entropy), the student model is trained with a distillation loss that includes:

- Soft Target Loss: The student tries to match the teacher's probability distribution over the output classes (soft targets).

- Hard Target Loss: The student is also trained on the actual labels (ground truth), just like in traditional supervised learning.

- The combination of these losses ensures the student benefits from both the teacher's knowledge and the actual data labels.

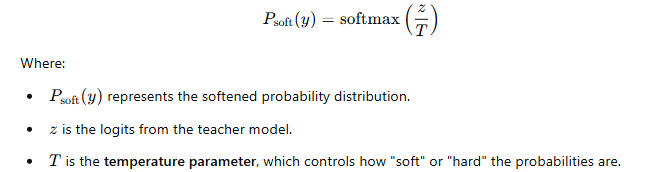

The soft targets are obtained using a temperature parameter TTT, which controls how "soft" the probabilities are:

where z is the logits from the teacher, and TTT is the temperature.

4. Training Process:

- During training, the student model learns from both the soft outputs of the teacher and the ground truth labels.

- The temperature TTT is often set higher for softer outputs, which allows the student model to learn more nuanced information.

5. Advantages:

- Efficiency: The student model is smaller and faster, suitable for deployment on edge devices or resource-constrained environments.

- Knowledge Transfer: The student captures the distilled knowledge of the teacher, which can include patterns and insights that aren't directly obvious from the raw data.

Example Applications:

- DeepSeek's Models: If DeepSeek is working on advanced NLP, vision, or multimodal tasks, they likely used distillation to scale down large foundation models like GPT-style architectures or vision transformers (ViTs) for efficient use in real-world applications.

- Custom Fine-Tuning: The student models might also be fine-tuned for domain-specific tasks after distillation, leveraging both general knowledge from the teacher and specificity from fine-tuning.

Distillation is a powerful tool in modern AI workflows, particularly when deploying large models in environments with constrained computational power, and it's a common strategy for production-grade systems.