07 May

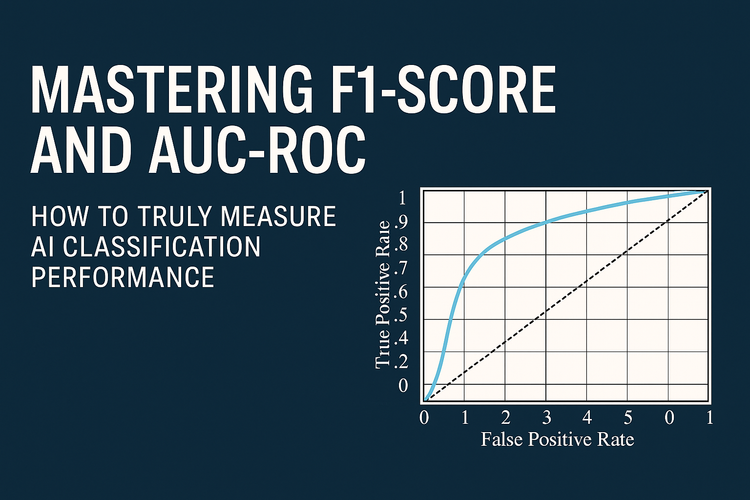

Mastering Classification Metrics: A Deep Dive from F1-Score to AUC-ROC

Accuracy lies. Learn why F1-score and AUC-ROC give a truer picture of AI model performance, especially on imbalanced datasets.

18 min read